RISE: Interactive Visual Diagnosis of Fairness in Machine Learning Models

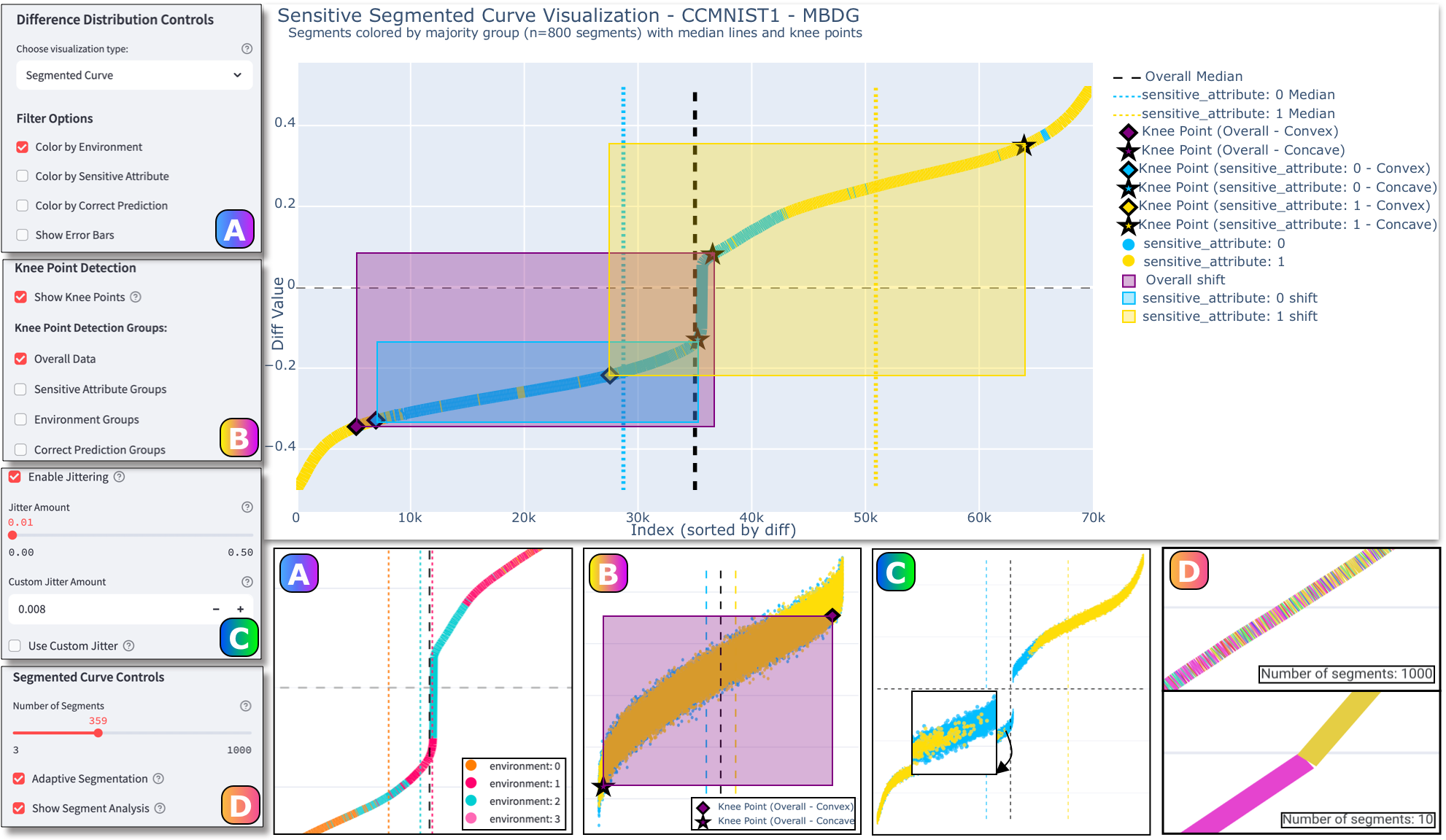

RISE (Residual Inspection through Sorted Evaluation) is an interactive visualization tool for post-hoc fairness diagnosis under domain shift. Evaluating fairness with scalar metrics alone is difficult: aggregate statistics obscure where and how disparities arise, especially when a model looks “fair” on average but exhibits severe bias for specific subgroups. RISE addresses this by turning sorted signed residuals (prediction minus label) into interpretable visual patterns and linking them to formal fairness notions.
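As a rough illustration of the starting point described above, the sketch below builds one sorted signed-residual curve per subgroup. It is not taken from the RISE codebase: the function name `sorted_residual_curves`, the percentile-rank x-axis, and the toy data are all assumptions made for the example.

```python
import numpy as np

def sorted_residual_curves(y_pred, y_true, group):
    """Return {group: (percentile_rank, sorted_residuals)} (hypothetical helper)."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    group = np.asarray(group)
    residuals = y_pred - y_true  # signed residual: prediction minus label
    curves = {}
    for g in np.unique(group).tolist():
        r = np.sort(residuals[group == g])
        # Express rank as a percentile in [0, 1] so that groups of
        # different sizes share the same x-axis (an assumption of this sketch).
        pct = np.linspace(0.0, 1.0, num=r.size) if r.size > 1 else np.zeros(1)
        curves[g] = (pct, r)
    return curves

# Toy data, invented for illustration only.
y_true = [0, 1, 1, 0, 1, 0]
y_pred = [0.2, 0.9, 0.4, 0.1, 0.7, 0.8]
group = ["a", "a", "a", "b", "b", "b"]
curves = sorted_residual_curves(y_pred, y_true, group)
```

Plotting each `(pct, r)` pair on shared axes gives the kind of per-group curve that the rest of the diagnosis operates on.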

Demonstration Video

If the video does not play in your browser, watch or download it on Dropbox.

The system plots residuals by rank and uses median alignment, twin knees (convex and concave inflection points), and adaptive segmentation to reveal localized disparities, subgroup differences across environments, and accuracy–fairness trade-offs that single-number metrics miss. Three residual-based indicators, F_mean (median alignment), F_shift (knee percentile disparity), and F_acc (knee magnitude disparity), complement standard metrics (e.g., Accuracy, Demographic Parity, Mean Difference) and support more informed model selection. RISE is aimed at ML practitioners who deploy models in real-world settings, and it is also suited to educational use for understanding fairness mechanisms beyond scalar dashboards.
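The page does not give a formula for F_mean, so the following is only one plausible reading of "median alignment": the absolute gap between the median signed residuals of two groups, together with a helper that centers a sorted curve on its own median. Both function names and the exact definition are assumptions of this sketch.

```python
import numpy as np

def median_align(residuals):
    """Sort residuals and shift them so the median sits at zero (assumed convention)."""
    r = np.sort(np.asarray(residuals, dtype=float))
    return r - np.median(r)

def f_mean(residuals_a, residuals_b):
    """Absolute gap between group medians; an assumed form of F_mean."""
    return float(abs(np.median(residuals_a) - np.median(residuals_b)))

# Toy residuals for two subgroups, invented for illustration only.
res_a = [-0.6, -0.1, 0.2]
res_b = [-0.3, 0.1, 0.8]
gap = f_mean(res_a, res_b)
aligned_a = median_align(res_a)
```

Under this reading, a value near zero means the two groups are over- and under-predicted to a similar degree at the median, while a large value indicates a systematic offset between them.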

Key Results

- Residual-based indicators: F_mean (group median alignment), F_shift (horizontal knee disparity), and F_acc (vertical knee disparity) for interpretable fairness diagnosis beyond scalar metrics.

- Twin-knee analysis: Convex and concave inflection points on sorted residual curves reveal where error regimes change; mismatched knees across subgroups expose localized bias.

- Accuracy–fairness trade-off visualization: Interactive comparison of algorithms (e.g., IRM, MBDG, IGA) on datasets such as BDD100K and ccMNIST, showing how high accuracy can hide distributional disparities.
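The twin-knee idea and the two knee-based indicators listed above can be sketched as follows. The knee detector here is a simple chord-distance heuristic (the point farthest above or below the straight line joining the curve's endpoints), swapped in for illustration; the page does not say which detector RISE uses. Taking the larger of the two knee gaps as F_shift and F_acc is likewise an assumption, as are all function names.

```python
import numpy as np

def twin_knees(residuals):
    """Locate a concave and a convex knee on a sorted residual curve.

    Heuristic (assumed, not necessarily the RISE method): normalize the
    curve to the unit square and pick the points farthest above and
    below the chord joining its endpoints.
    """
    r = np.sort(np.asarray(residuals, dtype=float))
    if r.size < 3 or r[-1] == r[0]:
        raise ValueError("need at least 3 residuals with non-zero range")
    x = np.linspace(0.0, 1.0, r.size)       # percentile rank
    y = (r - r[0]) / (r[-1] - r[0])         # residuals scaled to [0, 1]
    d = y - x                               # signed distance from the chord
    i_concave = int(np.argmax(d))           # farthest above the chord
    i_convex = int(np.argmin(d))            # farthest below the chord
    return {
        "concave": (float(x[i_concave]), float(r[i_concave])),
        "convex": (float(x[i_convex]), float(r[i_convex])),
    }

def knee_disparities(residuals_a, residuals_b):
    """Return (f_shift, f_acc) as the largest horizontal and vertical knee gaps."""
    ka, kb = twin_knees(residuals_a), twin_knees(residuals_b)
    f_shift = max(abs(ka[k][0] - kb[k][0]) for k in ka)  # percentile gap
    f_acc = max(abs(ka[k][1] - kb[k][1]) for k in ka)    # magnitude gap
    return f_shift, f_acc

# Toy residuals for two subgroups, invented for illustration only.
res_a = [-1.0, -0.2, -0.1, 0.0, 0.1, 0.2, 1.0]
res_b = [-1.0, -0.9, -0.1, 0.0, 0.1, 0.2, 1.0]
knees_a = twin_knees(res_a)
f_shift, f_acc = knee_disparities(res_a, res_b)
```

In this toy case the two groups share the same convex knee but their concave knees sit at different percentiles and magnitudes, which is the kind of localized mismatch that an aggregate accuracy score would not show.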

Code and Data

- RISE Repo

- Live Demonstration via Streamlit

- Datasets: BDD100K, with weather/time-of-day annotations.

Preprint

- Chen, R., Grant, C. RISE: Interactive Visual Diagnosis of Fairness in Machine Learning Models. Preprint (arXiv)