Comic Book Interpretation

Comics and manga are popular storytelling media, and research indicates that the popularity and sales of these types of book are only poised to grow. Meanwhile, no system exists to scan and read these popular media to visually impaired users, posing an accessibility problem for them.

To address this need among visually impaired and blind users, we investigate the ability of Vision Language Models (VLMs) to interpret comic book media gathered from sources in the public domain. Our goal is to inform design decisions for screen reading software with a feature that will scan comic books on a user's screen and read them a VLM's interpretation of the book.

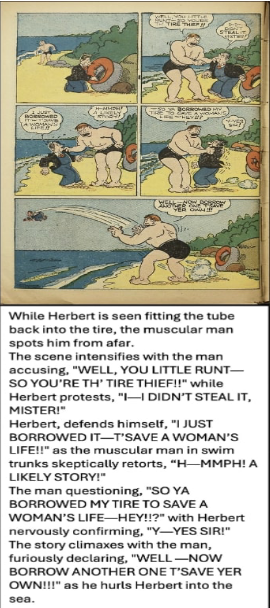

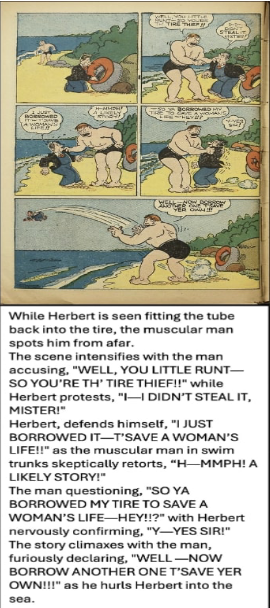

We have constructed a benchmarking dataset to evaluate VLMs' ability to scan and interpret comic books for visually impaired users. The image at the top of this page is a cropped version of a sample image from the dataset and the human-written groud truth interpretation against which machine interpretations are compared. The dataset and the results gathered. The full image is shown below.

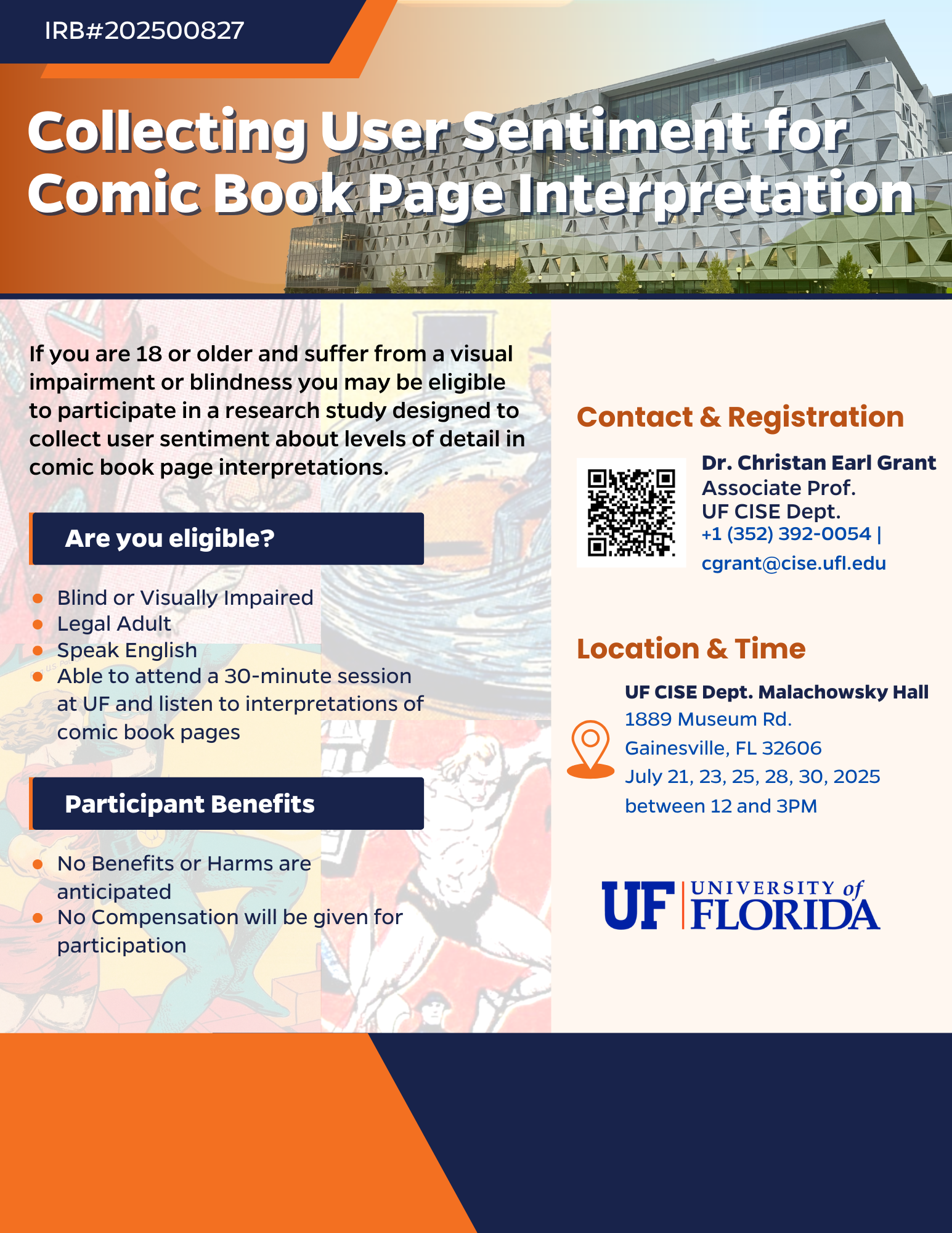

In the Summer of 2025, our team planned a user study investigate user preferences for varying levels of detail in the comic interpretations. The flyer below was distributed to attract participants.

Demo

Forthcoming

Links and Resources

People

Repo(s) (Contact UF Data Studio admin. for access.)

Publications

- Forthcoming