From Mixtec to Medicine

At the beginning of the Spring 2024 semester I traveled from Gainesville to Atlanta on an invitation to Hacklytics, a 36-hour data-science hackathon hosted by the Data Science Club at Georgia Institute of Technology.

Overview

I had an amazing experience learning about the latest technologies from AI veterans, building custom deep learning models to solve real-world problems, and connecting with talented data science students. In this post I highlight some of the biggest advantages that my time at the UF Data Studio provided me during the hackathon, and also some new techniques from the hackathon which I plan to incorporate in future research as well.

Environment Setup

At the start of the hackathon, we were given various tools to help complete our project. This included Intel Developer Cloud (IDC), a virtual IDE with free compute to train our machine learning models. One of the initial challenges was to set up IDC in our environment and demonstrate a successful run of one of the sample notebooks. I decided to work on a notebook that dealt with Large Language Model (LLM) finetuning, which was a key component for our team’s project idea.

Unlike the other sample notebooks, there were unintended bugs and environment issues that prevented successful training after the first run. I was able to resolve these issues early into the challenge due to similar environment issues I encountered while running virtual notebooks at the UF Data Studio. After presenting this to the Intel team that was on-site to validate completions, they were super excited and mentioned that even their own team members hadn’t been able to resolve the issues up to that point.

Project Overview

Diving deeper into the project, my team wanted to create an assistant app that provided better medical recommendations to individuals who can’t afford to see a doctor right away. This resource would also mitigate medical misinformation that patients may encounter on search engines like Google.

Our project, Medvice, consisted of two main components

- Medvisor - an AI assistant that provides recommendations in response to patient queries

- Specialties - finetuned models for specific downstream tasks such as skin cancer identification.

Vision Transformers

My research at the UF Data Studio has been highly focused on finetuning Vision Transformers to classify Mixtec figures. Going into the hackathon, I was able to incorporate prior knowledge on transformer architectures, applications towards image datasets, and their implementation using Hugging Face. This proved to be an amazing benefit as I was able to complete the pipeline for skin cancer identification in the first few hours of the hackathon, leaving extra time to focus on the AI assistant. Our classifier also performed extremely well on test datasets, identifying benign and malignant tumors with over 90% accuracy.

LLM Finetuning

For the AI assistant, we proposed a cascade model architecture which consists of two fine-tuned LLMs

- the first being tasked with identifying Fast Healthcare Interoperability Resources (FHIR) data medically relevant to a patient’s query, and

- the second being tasked with incorporating the patient-level data alongside a medical knowledge base to answer the query.

We hypothesized that this model would perform better than single-model architectures of similar size due to its finetuning and sanity checks during information transfer. This idea is inspired by the intuition behind real-life doctors, which use both your medical history and their own medical knowledge to provide recommendations, as opposed to just using the latter.

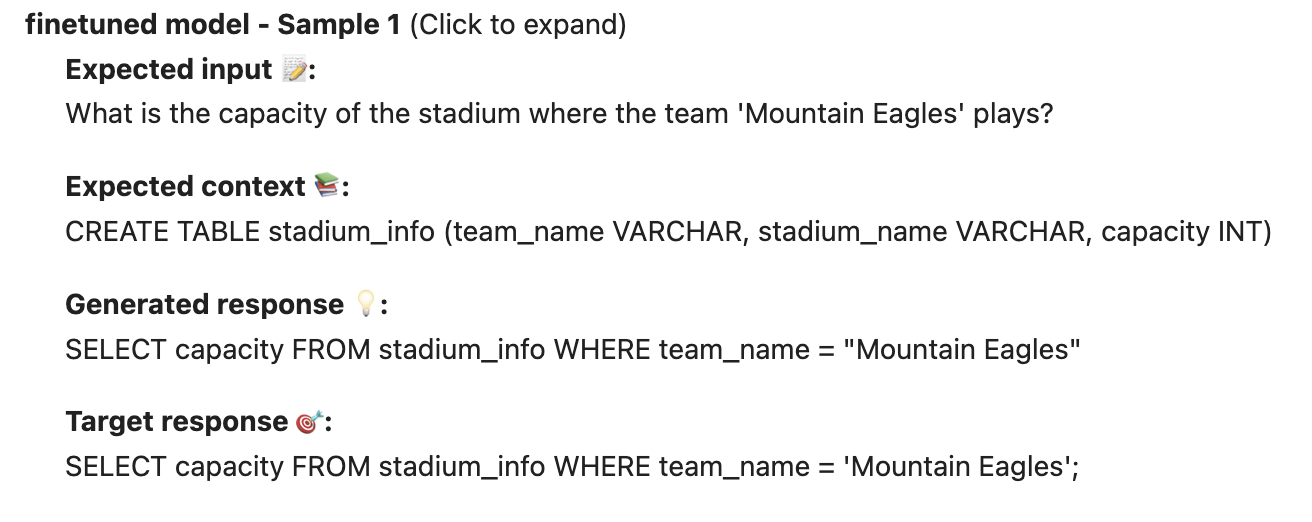

Despite my lack of experience with LLMs, during the hackathon I was able to experiment with a technique known as Quantized Low-Rank Adaptation (QLoRA) which was introduced in the Intel notebook from earlier. This technique allows one to finetune LLMs without needing access to the large compute that initially trains them. By updating only the most-important weights for a desired downstream task and also compressing those weights, QLoRA allowed my team to successfully finetune Meta AI’s LLaMA-7B into a text-to-SQL model. Given a natural language query and a complementary relational schema, our model provided SQL syntax which could be used to extract the FHIR data for our application.

Future Research

After seeing the potential for LLM finetuning on limited compute, my future research goals are to help incorporate techniques such as QLoRA into the existing LLM pipelines we have at the UF Data Studio. The hope is that this finetuning can exceed the performance of base LLMs while also challenging the performance of finetuned ViTs already in place. There is also potential to extract semantic information from the Mixtec language beyond figure classification alone.

Conclusion

I had an amazing journey during Hacklytics and learned so much from the inspiring students I got to work with. I’m also super thankful for my time at the UF Data Studio which has given me an edge in so many technical areas of my life.

If you’re interested to learn more about our project or see more results…

Check out our Streamlit demo here: https://medvice.streamlit.app/ Check out our full project here: https://devfolio.co/projects/medvice-2db8 Check out our GitHub here: https://github.com/demonicode/Medvice