Text datasets containing disfluencies

Written by: Keerti Banweer

"Alexa, How is the um weather today?" and get a reply - "Sorry, I did not get that?". We then repeat the question with clarity and fluency to get the correct response. This situation might seem familiar to many of us whenever we ask a question by correcting ourselves in the middle of completing the question and not getting an expected response from the voice assistant. Before leaving the house, this conversation may be pretty standard for people with voice assistants. Voice assistants have gained much popularity in the fast-paced generation where time is so precious, and we can talk to the speaker to answer some queries without spending time typing them. When talking to the voice assistants, humans can have pauses or corrections in the middle of the question because we are not always sure what we will say. We might not have trouble understanding that shift in the question, but machines can get confused.

Voice assistants are helpful and can be pretty accurate, but the imperfections in casual speech need to be understood by the machine to improve the user experience. The above conversation brings us to the discussion about addressing the imperfections in the questions and the robustness of the Question Answering task.

Question Answering defines a task in NLP where a question is answered automatically by a trained NLP model. The performance of such QA models is essential in the real world with the increasing popularity of voice assistants. If we type in the question without any error in a QA task, the model's output will be per the expectation, considering the model's performance. However, in scenarios of translated questions from speech to text, the translation cannot always be fluent as humans have interruptions in casual speech. For voice assistant systems, the question input for the QA application can have noise and disruptions, leading to the incorrect model prediction.

The popularity in applications such as Hugging face provides a vast collection of state-of-the-art NLP models.

Such trained NLP models utilize clean or fluent datasets for their training to generate state-of-the-art results. However, performance on the datasets with some noise can impact the performance, and that is why we need more research in creating datasets with noise and training NLP models to be robust enough toward such noise. This blog will discuss a modified Question and Answering dataset, SQUAD2.0 by 1, where contextual disfluencies are added to this dataset to evaluate the robustness of the question answering models.

We will discuss one of the related papers DISFL-QA: A Benchmark Dataset for Understanding Disfluencies in Question Answering that examines noise in the textual dataset because of disruption in the flow of speech.

Disfluencies2

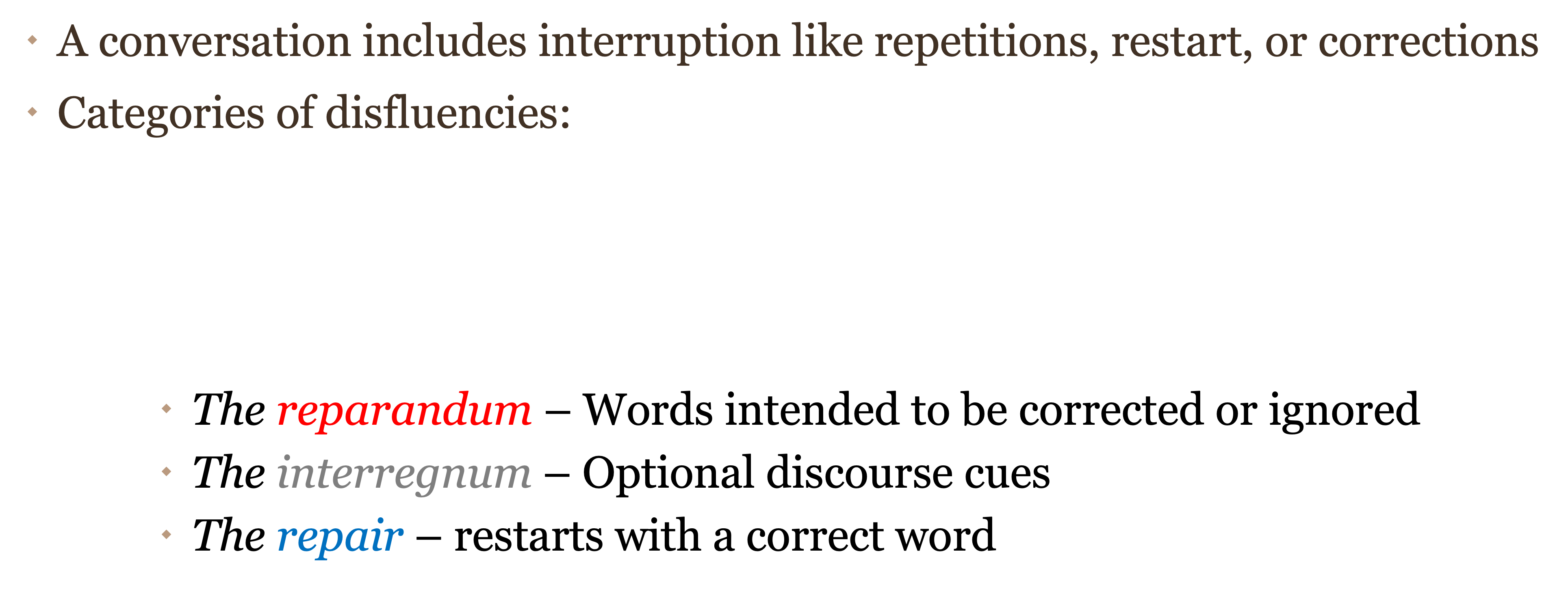

Listening to an audiobook can be a different experience from listening to a podcast. When we listen to audiobooks, they are recorded in a very noise-free environment by a fluent reader with a script. However, the podcasts or some TV interviews can have casual conversations and are not premeditated where speakers are not reading from a script. That means such conversation can have interruptions. A similar situation is when using voice assistants, where humans might correct what they are trying to ask; thus containing interruptions in the questions. Therefore, recording and converting a casual or live conversation to text is not always clean or fluent and can have interruptions like repetitions, restarts, or corrections 1. This concept is known as disfluencies in the text 2.

Figure 1 displays different conventional categories of Disfluencies2.

1. The reparandum (in red): Words intended to be corrected or ignored.

2. The interregnum (in gray): Optional discourse cues to show that a correction occurs in the following sequence.

3. The repair (in blue): restarts with the correct word.

Data in its raw form contains much noise that needs to be cleaned or processed before using the data for training a deep learning model. When collecting a large amount of textual data from sources like podcasts or live interviews, researchers encounter the critical challenge of addressing the noise added using ASR systems. The existing NLP models are trained on clean and fluent datasets and may not be robust enough towards noises such as disfluencies. This paper by 1 introduces a dataset with the disfluencies to train or fine-tune the existing model, making it more robust.

Overview of the paper

Aditya Gupta discusses that disfluencies in the textual dataset can impact a Question and answering model's predictions. For the training of the existing NLP models, researchers utilize clean and fluent datasets. The authors discuss that the datasets, including interruptions, can improve the robustness of the existing state-of-the-art models. This paper introduces a modified dataset, DISFL-QA, containing contextual disfluencies 2 in the collection of questions capturing the interruptions similar to real-world scenarios. The human raters (linguistic experts) add the disfluencies to the questions to an existing dataset, SQuAD2.0. The modified version of the questions should be semantically equivalent to the original question and should be natural with meaningful distractors.

The experiments discussed in this paper explore such datasets' importance in developing robust NLP models. The paper showcases a comparison between DISFL-QA and Switchboard. In the Switchboard corpus, only 5.9% of the words are disfluencies, and most of such disfluencies are repetitions. The following section will discuss the DISFL-QA Dataset and how the authors address different categories of disfluencies in modifying the SQuAD2.0 dataset.

DISFL-QA Dataset

DISFL-QA: is a benchmark dataset for Understanding Disfluencies in Question Answering. The authors of the paper 1 proposed a new dataset DISFL-QA that captures different categories of disfluencies in the questions of an existing question and answering dataset. The questions are from a collection in the dev version of the SQUAD-v2 dataset (The Stanford Question Answering Dataset) by Rajpurkar 3. This reading comprehension dataset consists of questions and answers to a collection of Wikipedia articles. This dataset has 100,000 question-answer pairs, with 50,000 unanswerable questions.

The DISFL-QA dataset is a modification of the dev version of an existing question and answering dataset called SQuAD2.0, and each question in the altered dataset contains contextual disfluencies.

The authors alter the questions from the original dataset to include contextual disfluencies; with the help of linguistic experts. The different disfluencies addressed in this paper are interrogative restart, entity correction, adverb/adjective Correction, and entity type correction.

Conclusion

Language models are not robust to disfluencies. Contextual heuristics partially recover performance. Understanding disfluencies is vital in improving human-machine communication.

Footnotes

-

Gupta, A., Xu, J., Upadhyay, S., Yang, D., & Faruqui, M. (2021, August). Disfl-QA: A Benchmark Dataset for Understanding Disfluencies in Question Answering. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021 (pp. 3309-3319). ↩ ↩2 ↩3 ↩4

-

Shriberg, E. E. (1994). Preliminaries to a theory of speech disfluencies (Doctoral dissertation, University of California, Berkeley). ↩ ↩2 ↩3 ↩4

-

Rajpurkar, P., Zhang, J., Lopyrev, K., & Liang, P. (2016). Squad: 100,000+ questions for machine comprehension of text. arXiv preprint arXiv:1606.05250. ↩