CIS 6930 Spring 25

This is the web page for Data Engineering at the University of Florida.

Using NVIDIA DGX

Before you can use DGX, you need an account on the DGX cluster. As part of the class, you have been given access to the DGX cluster. This document points you to resources that will help you understand the cluster and get started.

The NVIDIA DGX cluster is a high-performance computing cluster at the University of Florida. It is a shared resource available to all UF faculty, staff, and students, and it is managed by the Research Computing department at UF. It is similar to HiPerGator and Open OnDemand HiPerGator, but it is a distinct system. If you are not familiar with HiPerGator, you can review the links above or visit the Getting Started page from the data science course here.

Accessing DGX

To use DGX, you log in with your Gatorlink credentials. You cannot connect over SSH right away; you must first access the cluster through the web interface. You can only connect from the UF network or through the UF Cisco VPN.

Once you are connected to the UF network or the VPN, you can access the DGX cluster by going to https://dgxcloud-ood.rc.ufl.edu/. You can log in using your Gatorlink credentials.

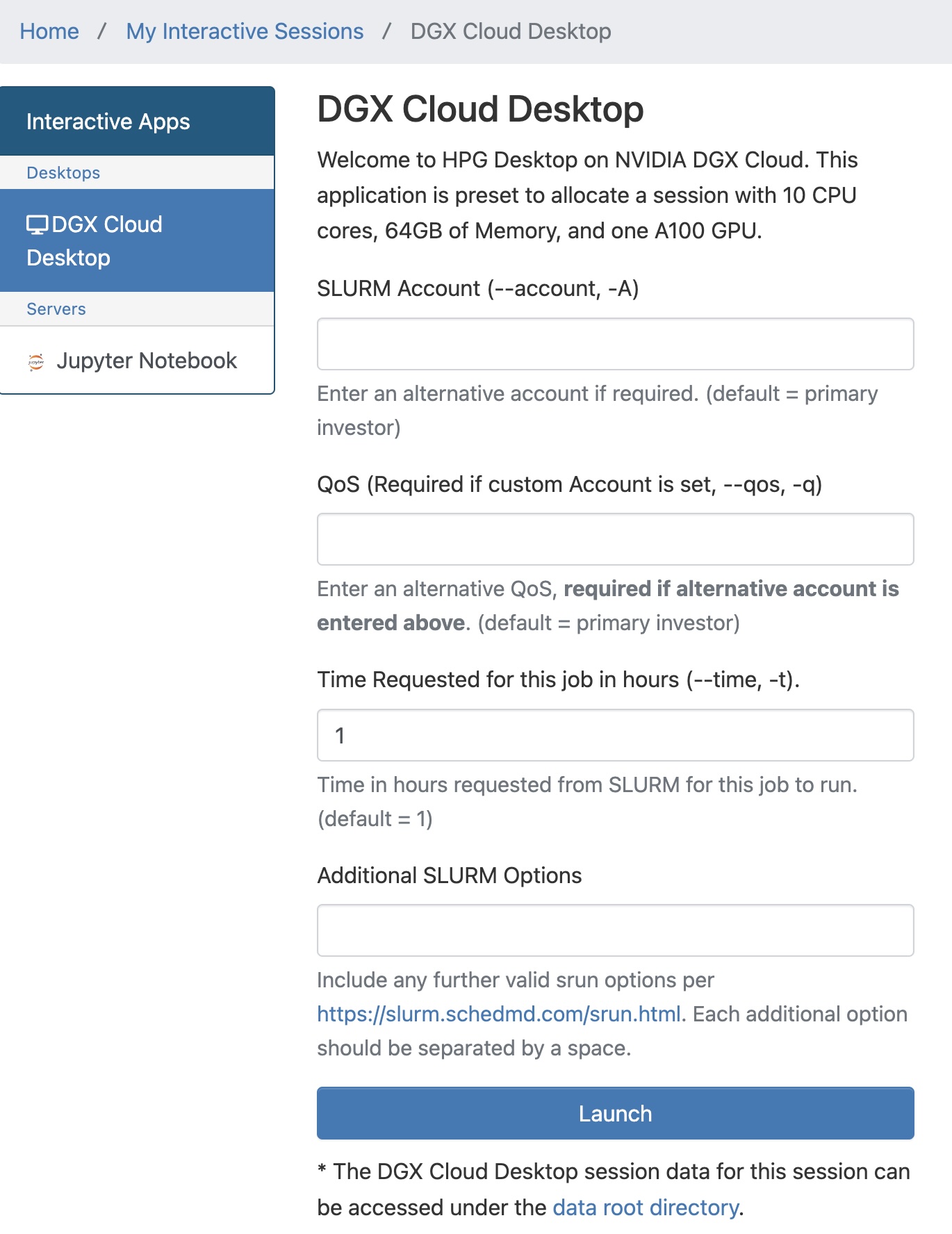

You can open a DGX Cloud desktop or a Jupyter Notebook from this interface. When you select either option, you will be prompted to choose the resources you need before the desktop or notebook starts. Request only the minimum resources you need to complete your work.

For the Slurm account, enter your Gatorlink username.

For the QOS, enter cis6930, the group ID for the class.

Under Time, request only as many hours as you need.

The form is preset to request a session with 10 CPU cores, 64 GB of memory, and one A100 GPU.

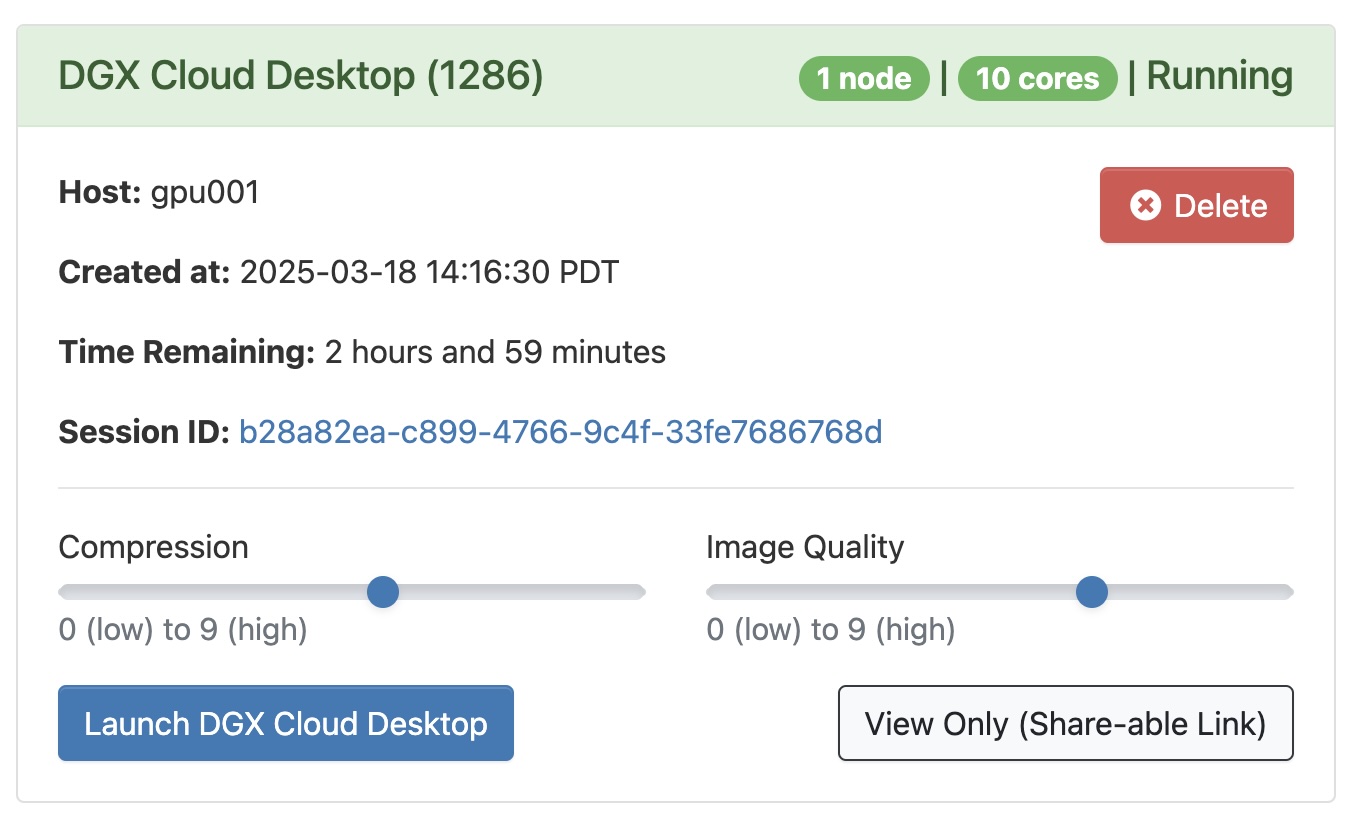
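If you later work from the command line instead of the web form, the same request can be written as ordinary SLURM options. The helper below is a hypothetical sketch, not part of the Research Computing tooling: it assumes the Slurm account field corresponds to `--account`, the QOS field to `--qos`, and the Time field (in hours) to `--time`, and it defaults to the preset 10 cores, 64 GB, and one GPU.

```bash
#!/bin/bash
# Hypothetical helper: print srun options that roughly mirror the
# Open OnDemand form (account, qos, hours, CPU cores, memory, GPUs).
# Assumption: each form field maps to the standard SLURM option shown.
ood_form_to_srun_args() {
  local account="$1" qos="$2" hours="$3"
  local cpus="${4:-10}" mem="${5:-64gb}" gpus="${6:-1}"
  # "--" stops printf from treating the leading "--account" as an option.
  printf -- '--account=%s --qos=%s --time=%02d:00:00 --cpus-per-task=%s --mem=%s --gres=gpu:%s\n' \
    "$account" "$qos" "$hours" "$cpus" "$mem" "$gpus"
}
```

For example, `srun $(ood_form_to_srun_args "$USER" cis6930 2) --partition=gpu --pty bash -i` would ask for a two-hour interactive session; the `gpu` partition is the one used in the class `srun` command, and whether it matches the A100 nodes behind the web form is an assumption.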

After you submit the request, it is added to the queue. The shorter the request, the sooner it is likely to be allocated. Once the resources are allocated, you can launch the machine and view it as a desktop or in a terminal.

Launch the DGX Cloud Desktop to view the desktop in your browser window.

Using Jupyter Hub

Accessing the DGX JupyterHub is similar to the desktop interface, except that you need to enter the number of CPU cores, the amount of memory, and the number of GPUs you need. Select the smallest set of resources that will let you complete your work.

Using DGX

For complete information on using DGX for this semester, visit the Research Computing Documentation.

Connecting with SSH

If you would like to connect to DGX using SSH, follow the instructions at DGX SSH.

Connecting with SSH will bring you to the DGX login node.

From there you can submit jobs to the cluster using the SLURM scheduler.

To run SLURM commands, you need the class group ID: add the option --qos=cis6930.

You can check your groups by running the id command with your Gatorlink username:

id gatorlink
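To check membership from a script instead of reading the `id` output by eye, you can test whether `cis6930` appears among a user's group names. This is a small sketch that assumes the class group is visible to `id` under the name `cis6930`; `in_group` is a hypothetical helper name.

```bash
#!/bin/bash
# Return success (0) if user $1 belongs to the group named $2.
# `id -nG` prints the user's group names separated by spaces.
in_group() {
  local user="$1" group="$2"
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$group"
}

# Example: report whether the current user is in the class group.
if in_group "$(id -un)" cis6930; then
  echo "$(id -un) is in cis6930"
else
  echo "$(id -un) is not in cis6930"
fi
```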

Add the line below to your sbatch scripts:

#SBATCH --qos=cis6930
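A complete batch script that combines the `--qos=cis6930` directive with the same resources as the interactive `srun` command below might look like the following sketch. The job name, the output file pattern, and the `print_job_info` helper are illustrative choices, not class requirements.

```bash
#!/bin/bash
#SBATCH --job-name=cis6930-gpu-test
#SBATCH --qos=cis6930
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=2
#SBATCH --mem=16gb
#SBATCH --partition=gpu
#SBATCH --gres=gpu:1
#SBATCH --time=01:00:00
#SBATCH --output=%x-%j.out

# Print a short summary of what SLURM allocated to this job.
# Outside SLURM the variables are unset, so fallbacks are shown.
print_job_info() {
  echo "Job: ${SLURM_JOB_ID:-not-under-slurm}"
  echo "Host: $(hostname)"
  echo "CPUs allocated: ${SLURM_CPUS_PER_TASK:-unknown}"
  echo "Visible GPUs: ${CUDA_VISIBLE_DEVICES:-none}"
}

print_job_info

# Query the GPU only when the NVIDIA driver tools are present.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi
fi
```

Save it as, for example, `gpu_job.sh`, submit it from the login node with `sbatch gpu_job.sh`, check its place in the queue with `squeue -u $USER`, and cancel it with `scancel <jobid>` if needed. Because the `#SBATCH` lines are ordinary comments to bash, the body can also be run directly as a quick sanity check.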

The following command gives you interactive command-line access to a GPU node with 2 CPUs, 16 GB of memory, and one GPU for 60 minutes:

srun --qos cis6930 --ntasks=1 --cpus-per-task=2 --mem=16gb --partition=gpu --gres=gpu:1 -t 60 --pty bash -i
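Once the interactive shell starts on the GPU node, a quick check confirms what was actually granted. The sketch assumes SLURM exports `CUDA_VISIBLE_DEVICES` for `--gres=gpu` requests, which is typical but depends on the cluster configuration; `visible_gpu_count` is a hypothetical helper.

```bash
#!/bin/bash
# Count GPUs listed in CUDA_VISIBLE_DEVICES (e.g. "0" -> 1, "0,1" -> 2).
visible_gpu_count() {
  local devices="${CUDA_VISIBLE_DEVICES:-}"
  if [[ -z "$devices" ]]; then
    echo 0
  else
    awk -F',' '{ print NF }' <<< "$devices"
  fi
}

echo "SLURM job: ${SLURM_JOB_ID:-not inside a SLURM allocation}"
# nproc reports the CPUs this process is allowed to run on.
echo "CPUs usable by this shell: $(nproc)"
echo "GPUs visible: $(visible_gpu_count)"

# List the GPU models when the NVIDIA tools are installed.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi -L
fi
```

Type `exit` to leave the session when you are done; this ends the allocation and releases the GPU instead of holding it until the `-t 60` limit expires.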

Your home directory is /home/gatorlink, where gatorlink is your Gatorlink username.

You also have access to the class folder in case you need to share resources.

You have a folder named after your Gatorlink username in /lustre/fs0/cis6930/.
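To share something through the class folder, you can copy it into your folder under `/lustre/fs0/cis6930/` and grant the group read access. This sketch assumes your folder is `/lustre/fs0/cis6930/$USER` and that group permissions are how classmates gain access; check both with `ls -ld` before relying on them. `share_with_class` and `CLASS_DIR` are hypothetical names.

```bash
#!/bin/bash
# Assumed location of your class folder; override CLASS_DIR to use another path.
CLASS_DIR="${CLASS_DIR:-/lustre/fs0/cis6930/${USER}}"

# Copy a file or directory into the class folder, then make it
# group-readable (and directories group-traversable) for classmates.
share_with_class() {
  local src="$1"
  local dest="${CLASS_DIR}/$(basename "$src")"
  cp -r -- "$src" "$CLASS_DIR/" || return 1
  chmod -R g+rX -- "$dest"
  echo "$dest"
}
```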

This semester, 32 CPUs, 16 GPUs, and 2 TB of storage have been allocated to the class group cis6930. This allocation expires on the Date of Commencement for the Spring 2025 semester: 04 May 2025. Back up your data; it will be deleted without warning. If you need more resources, please contact me.
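Because the data will be deleted after the allocation expires, it helps to bundle anything you want to keep into a single archive and copy it off the cluster. The sketch below creates a dated `.tar.gz` of a directory; `make_backup` is a hypothetical helper, not a cluster tool.

```bash
#!/bin/bash
# Create a dated, compressed archive of a directory and print its path.
# Usage: make_backup SOURCE_DIR [OUTPUT_DIR]
make_backup() {
  local src_dir="$1"
  local out_dir="${2:-.}"
  local stamp
  stamp="$(date +%Y%m%d)"
  local archive="${out_dir}/$(basename "$src_dir")-${stamp}.tar.gz"
  # -C switches to the parent directory so the archive stores relative paths.
  tar -czf "$archive" -C "$(dirname "$src_dir")" "$(basename "$src_dir")" || return 1
  echo "$archive"
}
```

After creating the archive on DGX, copy the printed path to your own computer with `scp`, using the host name given in the DGX SSH instructions.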

Video on Using Slurm

Tutorial on using SLURM

Acceptable Use Policy

You must agree to the following acceptable use policy to use the resources on DGX Cloud.

ACCEPTABLE USE

I acknowledge that the access to the HPC resources operated by UF Research Computing is subject to the UF Acceptable Use Policy at https://it.ufl.edu/policies/acceptable-use/acceptable-use-policy/ and the Research Computing policies at https://www.rc.ufl.edu/documentation/policies/ and that I am responsible for following these policies.

RESTRICTED DATA

I also certify that using restricted data and software on the HPC resources requires extra steps described at UFRC Policies and at UFRC Export Policies, and that I will notify both my account sponsor and the Office of Research (Research Compliance) and Research Computing at
